Event-driven communication systems (Message Brokers) enable loose coupling between services and components within an organization or project while ensuring asynchronous communication, scalability, high throughput, reliability, and the security of transmitted data.

Apache Kafka is one of the most popular message brokers, known for its performance and widely used by companies like Netflix, Uber, and LinkedIn. But does it truly meet these expectations? Does it ensure data security, scalability, and reliability? Does it work optimally right after installation, or does it require additional configuration?

Apache Kafka architecture

In this section, I will present and briefly discuss the components that make up a typical Apache Kafka installation. Understanding how they work will make it easier to analyze Kafka’s functionality and configuration options further.

What are the core components of Apache Kafka?

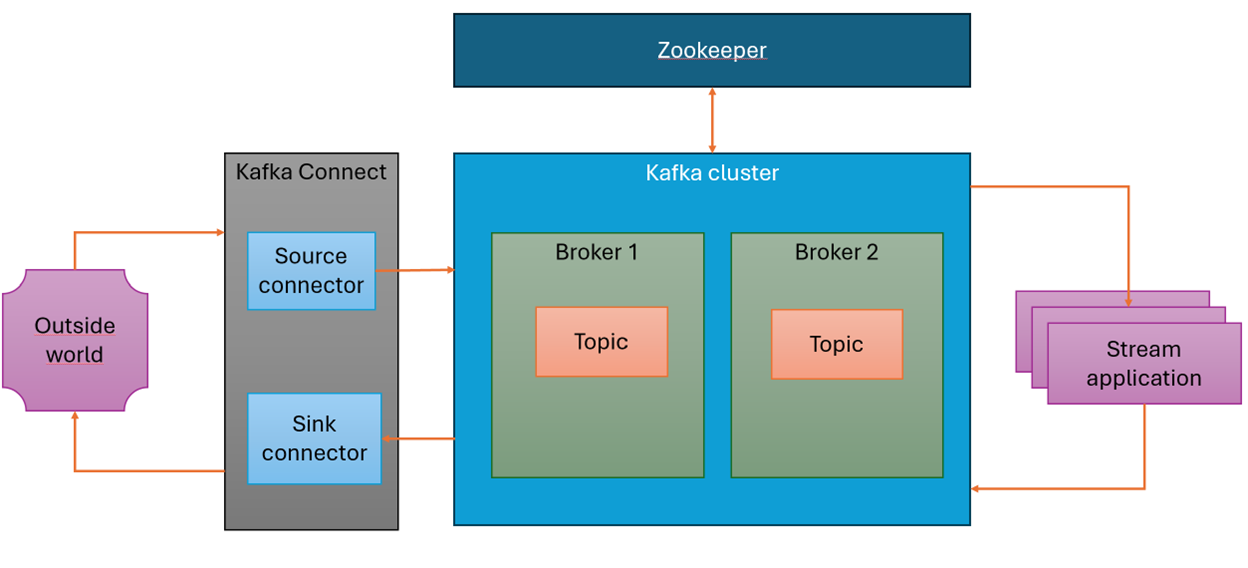

The above diagram illustrates the typical components of an Apache Kafka installation. The key element is the Kafka cluster, which consists of brokers—servers responsible for storing and processing data.

The cluster is managed by Apache ZooKeeper (version 2.8.0 introduced an alternative called KRaft mode, which eliminates the need for ZooKeeper; as of version 3.5.0, ZooKeeper is marked as deprecated).

Each broker stores data in topics, which are divided into partitions. Partitioning plays a crucial role in Kafka’s scalability and performance, as it allows multiple consumers to process data in parallel.

What is partitioning and message order?

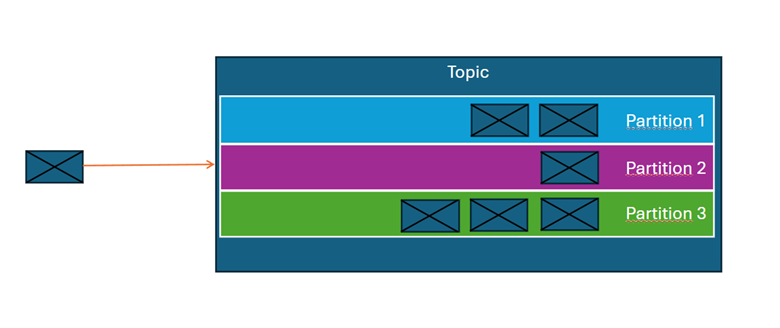

When writing messages to a topic, Kafka assigns them to a specific partition based on one of two methods:

- Partition key – If a key is specified, all messages with the same key are sent to the same partition.

- Round-robin algorithm – If no key is specified, Kafka spreads messages evenly across the available partitions (newer client versions rotate partitions batch by batch rather than message by message).

Within a single partition, Kafka guarantees message order (based on the time of writing). However, it does not guarantee global order across different partitions within the same topic.

Each message in a partition has a unique identifier called an offset, which allows consumers to track and manage the processing of data.
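The two assignment methods and the per-partition offsets can be sketched with a small in-memory model. This is only an illustration, not a Kafka client: `ToyTopic` is a hypothetical class, and `zlib.crc32` stands in for the hash function that Kafka's own partitioner uses.

```python
from __future__ import annotations

import zlib
from collections import defaultdict
from itertools import count


class ToyTopic:
    """In-memory stand-in for a topic (hypothetical, not a Kafka API)."""

    def __init__(self, num_partitions: int) -> None:
        self.num_partitions = num_partitions
        # partition number -> list of (key, value); the list index is the offset
        self.partitions = defaultdict(list)
        self._round_robin = count()

    def choose_partition(self, key: str | None) -> int:
        if key is None:
            # No key: take the partitions in turn.
            return next(self._round_robin) % self.num_partitions
        # Same key -> same hash -> same partition.
        # crc32 is an assumption made for this sketch only.
        return zlib.crc32(key.encode("utf-8")) % self.num_partitions

    def send(self, key: str | None, value: object) -> tuple[int, int]:
        """Append a message and return (partition, offset)."""
        partition = self.choose_partition(key)
        log = self.partitions[partition]
        log.append((key, value))
        return partition, len(log) - 1


topic = ToyTopic(num_partitions=3)
first = topic.send("order-42", "created")
second = topic.send("order-42", "paid")
print(first, second)  # same partition, consecutive offsets
```

Because both messages share a key, they land in the same partition and keep their relative order; messages with different keys may end up in different partitions, where no mutual ordering is guaranteed.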

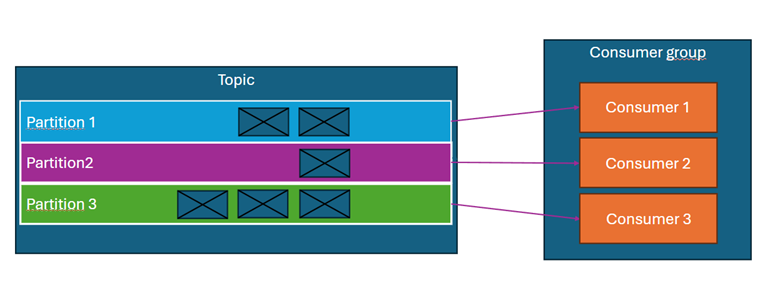

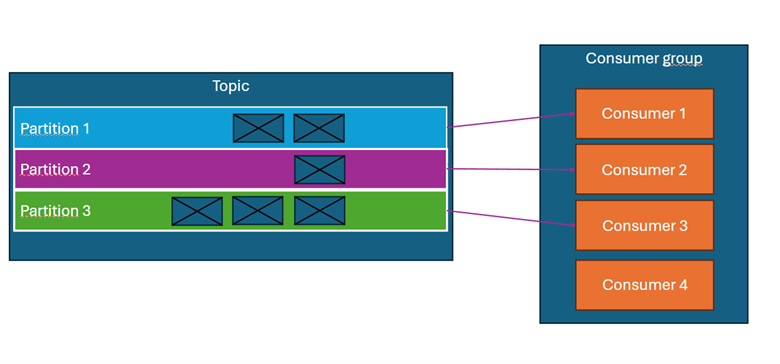

What is a consumer group and parallel processing?

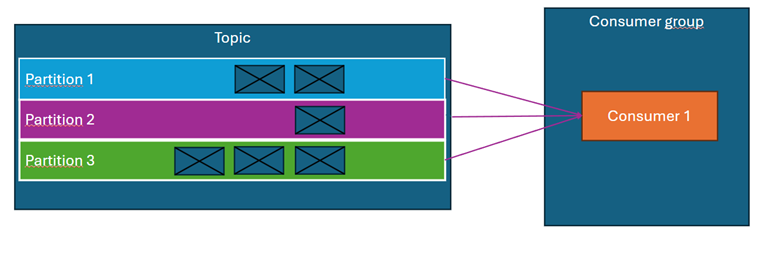

Kafka consumers are assigned to consumer groups. Each consumer in a group reads messages from one or more partitions, but no partition can be handled by more than one consumer within the same group.

Examples:

- The topic has 3 partitions, and the consumer group consists of a single consumer → the consumer receives messages from all partitions. (Diagram above)

- The topic has 3 partitions, and the group consists of 3 consumers → each consumer receives messages from one partition. (Diagram above).

- The topic has 3 partitions, and the group consists of 4 consumers → one of the consumers remains inactive. (Diagram above).

The consumer group can be thought of as a Spring Boot or Quarkus application, while an individual consumer can be considered a thread within that application. Therefore, when planning the throughput of a deployed system, the number of partitions in topics should be appropriately chosen—the number of partitions determines the maximum number of threads that can process messages in parallel.
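The three examples above can be reproduced with a simplified assignment function. It is a sketch under the assumption of a plain round-robin distribution; Kafka's real assignment strategies are configurable and more elaborate, and `assign_partitions` is a hypothetical helper.

```python
from __future__ import annotations


def assign_partitions(partitions: list[int], consumers: list[str]) -> dict[str, list[int]]:
    """Give each partition to exactly one consumer of the group."""
    assignment: dict[str, list[int]] = {consumer: [] for consumer in consumers}
    if not consumers:
        return assignment
    for index, partition in enumerate(sorted(partitions)):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)
    return assignment


for group in (["c1"], ["c1", "c2", "c3"], ["c1", "c2", "c3", "c4"]):
    print(assign_partitions([0, 1, 2], group))
```

With four consumers and three partitions, the last consumer receives an empty list, which is why adding consumers beyond the partition count does not increase throughput.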

The role of a broker in a Kafka Cluster

Each broker performs several key functions:

- Leading partitions – A broker can act as the leader for selected partitions and handle their read/write operations.

- Data replication – The broker transfers partition data to other servers in the cluster, ensuring redundancy.

- Managing consumer offsets – The broker stores, for each consumer group, the position up to which messages have been read (the consumer offset).

- Handling data retention in topics – The broker deletes older data from partitions according to the retention policy set for a given topic.

What is Kafka Connect? How does Kafka integrate with other systems?

Another component of the architecture is Kafka Connect. It is a mechanism that enables Kafka to integrate with other systems, allowing communication with external sources. It consists of two types of connectors:

- Source – Connectors that read data from source systems (e.g., databases, file systems, S3) and send them to Kafka.

- Sink – Connectors that retrieve/read data from specified Kafka topics and write them to target systems (e.g., databases, file systems, S3).

There are many ready-to-use connectors available on the market. The most popular providers include:

- Confluent → Confluent connectors (some require a license).

- Camel → Apache Camel connectors (Apache 2.0 license).

- Lenses.io → Lenses.io connectors (Open Source license).

It is worth noting that in the case of Confluent, the license may vary depending on the connector—some require payment, while others are open source. Sometimes, even the source and sink connectors for the same system may have different licenses.

An interesting option is Lenses.io connectors, which offer, among other things, a JMS connector—we have used it in one of our projects.

The role of stream applications – data processing in Kafka

The last component shown in the architecture diagram is Stream Application. This is a component that fetches data from a topic, processes it, and writes it to a target topic. It acts as both a producer and a consumer of messages.

Stream Applications can be developed using various frameworks, such as:

- Spring Boot

- Quarkus

Typical operations performed by Stream Applications include:

- Message transformations

- Calculations

- Aggregation, merging messages from multiple topics
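The consume, process, and produce cycle of a stream application can be sketched in a few lines. The example below uses plain Python lists in place of topics, so it shows only the shape of the logic; the record fields (`customer`, `amount_cents`) and the function name are assumptions made for illustration.

```python
from collections import defaultdict


def run_stream_app(input_topic, output_topic):
    """Read records, normalize them, and write running totals per customer."""
    totals = defaultdict(int)
    for record in input_topic:                         # consumer side
        customer = record["customer"].strip().lower()  # transformation
        totals[customer] += record["amount_cents"]     # aggregation
        output_topic.append(                           # producer side
            {"customer": customer, "total_cents": totals[customer]}
        )
    return output_topic


source = [
    {"customer": " Alice ", "amount_cents": 1250},
    {"customer": "bob", "amount_cents": 300},
    {"customer": "alice", "amount_cents": 750},
]
print(run_stream_app(source, []))
```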

The components discussed above form the foundation of Apache Kafka’s architecture. In the following sections, we will examine their configuration and optimization.

Kafka has extensive documentation, and many additional resources can be found online. For example, it is worth exploring elements such as Schema Registry, which enables managing schemas of messages transmitted in Kafka—this was not discussed above.

Functional areas of Apache Kafka

Before diving into a detailed analysis, it is worth discussing the key functional areas that a message broker should provide:

- Deployment and Configuration – The broker (or the surrounding ecosystem of tools) should enable easy deployment of components, queue management, access control, and flexible system configuration.

- Monitoring – A crucial aspect of a broker’s operation (or its ecosystem) is the ability to monitor components and collect key metrics, such as throughput, resource utilization (CPU/RAM), queue size, and other operational parameters.

- Security – The broker should support secure data transmission protocols and implement authentication and authorization mechanisms to control access to queues and operations.

- High Availability and Disaster Recovery – The broker should support mechanisms that ensure continuous operation, such as data replication, disaster recovery strategies, and backup creation.

- High Message Processing Performance – The broker should provide high-throughput message processing while optimally utilizing system resources (CPU/RAM).

In the upcoming sections, we will analyze these areas in the context of Apache Kafka, discussing its capabilities and highlighting elements that are important to consider to avoid potential issues.

Deploying and Configuring Kafka

Apache Kafka offers various installation options. In our projects, we most commonly deploy Kafka on Kubernetes clusters or Red Hat OpenShift, as these provide high availability and security (a detailed discussion of these aspects is beyond the scope of this article).

One of the most popular ways to install Apache Kafka on OpenShift is Strimzi (strimzi.io), which follows the Operator Pattern.

Strimzi introduces a set of dedicated resources (Custom Resource Definitions – CRDs) that allow for describing the installation of a Kafka cluster, Kafka Connect, connectors, defining topics, and managing access permissions.

One of the main advantages of Strimzi is that it significantly simplifies the installation of the entire Apache Kafka ecosystem, which—as discussed in the architecture section—consists of multiple components.

In the examples below, we use Helm templates (helm.sh) to define resources for an OpenShift cluster.

Installing an Apache Kafka Cluster

The fundamental CRD is the Kafka CRD, which allows for the installation of a Kafka cluster:

    apiVersion: kafka.strimzi.io/v1beta2
    kind: Kafka
    metadata:
      name: {{ include "broker.fullname" . }}
      labels:
        {{- include "broker.labels" . | nindent 4 }}
    spec:
      kafka:
        resources: # (1)
          requests:
            cpu: {{ .Values.kafka.resources.requests.cpu }}
            memory: {{ .Values.kafka.resources.requests.memory }}
          limits:
            cpu: {{ .Values.kafka.resources.limits.cpu }}
            memory: {{ .Values.kafka.resources.limits.memory }}
        version: 3.4.0
        replicas: {{ .Values.kafka.replicas }} # (2)
        …

In (1), we specify the compute resources for Kafka brokers: the CPU and memory requests and limits.

In (2), we define the number of brokers that will form the Apache Kafka cluster.

In the same CRD, we can also specify the installation parameters for Zookeeper.

      zookeeper:
        resources: # (1)
          requests:
            cpu: {{ .Values.zookeeper.resources.requests.cpu }}
            memory: {{ .Values.zookeeper.resources.requests.memory }}
          limits:
            cpu: {{ .Values.zookeeper.resources.limits.cpu }}
            memory: {{ .Values.zookeeper.resources.limits.memory }}
        replicas: {{ .Values.zookeeper.replicas }} # (2)

In (1), we specify the CPU/RAM resources allocated for Zookeeper, and in (2), the number of its instances.

Additionally, in the same CRD, we can configure disk parameters for Apache Kafka as well as detailed configuration settings.

        storage: # (1)
          type: jbod
          volumes:
            - id: 0
              type: persistent-claim
              size: {{ .Values.kafka.storage.size }}
              deleteClaim: true
              class: {{ .Values.kafka.storage.class }}
            - id: 1
              type: persistent-claim
              size: {{ .Values.kafka.storage.size }}
              deleteClaim: true
              class: {{ .Values.kafka.storage.class }}
        config: # (2)
          message.max.bytes: {{ .Values.kafka.config.messageMaxBytes }} # (3)
          num.partitions: {{ .Values.kafka.config.defaultNumPartitions }} # (4)
          log.retention.hours: {{ .Values.kafka.config.logRetentionHours }} # (5)
          num.network.threads: {{ .Values.kafka.config.networkThreads }} # (6)
          num.io.threads: {{ .Values.kafka.config.ioThreads }} # (7)

In (1), we define the storage for the Apache Kafka cluster being created.

In (2), we define the detailed configuration parameters for the broker. In the above example, several basic parameters are specified, such as:

- (3) maximum message size,

- (4) default number of partitions,

- (5) default retention period for topics,

- (6) number of network threads,

- (7) number of I/O threads.

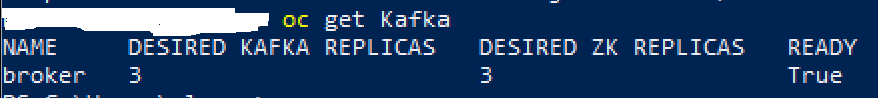

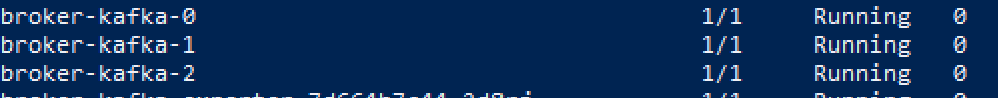

After loading the above configuration into the Kubernetes or OpenShift cluster and processing it through the Strimzi operator, we will obtain a fully functional Apache Kafka cluster managed by Zookeeper.

Its operation can be verified using the appropriate tools:

After listing the pods, we will see both the brokers and ZooKeeper:

The next component to install is Kafka Connect, which can be deployed using another CRD provided by Strimzi, called KafkaConnect:

    apiVersion: kafka.strimzi.io/v1beta2
    kind: KafkaConnect
    metadata:
      name: connect-cluster-{{ include "broker.fullname" . }}
      annotations:
        strimzi.io/use-connector-resources: "true"
    spec:
      image: {{ .Values.kafkaConnect.image.repository }}:{{ .Values.kafkaConnect.image.tag }}
      replicas: {{ .Values.kafkaConnect.replicas }} # (1)
      bootstrapServers: {{ include "broker.fullname" . }}-kafka-bootstrap:9093 # (2)

In (1), we define the number of instances that make up the Kafka Connect cluster, and in (2), we specify the reference to the Apache Kafka cluster.

The KafkaConnect CRD also allows us to define other elements, such as security settings, resource allocation, and Kafka Connect worker configuration parameters.

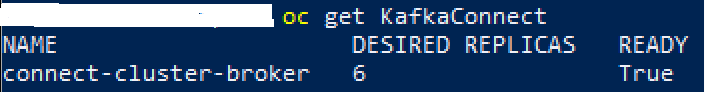

After uploading this CRD to the OpenShift cluster, we will obtain a fully functional Kafka Connect cluster, which can be verified using the following command:

In this way, we have efficiently and quickly installed the Apache Kafka cluster and Kafka Connect. The final step is to deploy a sample topic and a sample connector.

Let’s start with the topic definition, for which Strimzi provides a dedicated CRD – KafkaTopic:

    apiVersion: kafka.strimzi.io/v1beta2
    kind: KafkaTopic
    metadata:
      name: {{ .Values.kafkaTopics.file.data.name }} # (1)
      labels:
        strimzi.io/cluster: {{ .Values.kafkaTopics.cluster }}
    spec:
      partitions: {{ .Values.kafkaTopics.file.data.partitions }} # (2)
      replicas: {{ .Values.kafkaTopics.file.data.replicas }} # (3)
      config:
        retention.ms: {{ .Values.kafkaTopics.file.data.config.retention }} # (4)

This simple file allows us to specify:

- (1) The name of the topic.

- (2) The number of partitions for the topic – if not specified, Kafka will use the default value configured at the broker level (as described in the CRD for the Kafka cluster).

- (3) The number of replicas for the topic – determines how many copies of the data should be stored in the cluster.

- (4) The retention period for the topic – the duration for which data is stored before being deleted. Messages older than the specified value will be removed.

The retention parameter is crucial for Kafka cluster disk space management. Different topics can have different retention values depending on specific needs—topics with greater business importance can be assigned longer retention periods.
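The effect of a time-based retention setting can be illustrated with a short sketch. It is a simplification: it filters individual records, whereas Kafka removes data in larger units (log segments), so real deletion is coarser and may happen later. The function names are hypothetical.

```python
def hours_to_ms(hours: int) -> int:
    """Convert a retention period in hours to the milliseconds used by retention.ms."""
    return hours * 60 * 60 * 1000


def apply_retention(log, retention_ms: int, now_ms: int):
    """Keep only (timestamp_ms, value) records that are not older than retention_ms."""
    return [(ts, value) for ts, value in log if now_ms - ts <= retention_ms]


one_week = hours_to_ms(7 * 24)
now = 1_000_000_000_000
log = [
    (now - one_week - 1, "expired"),
    (now - 1_000, "recent"),
]
print(apply_retention(log, one_week, now))
```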

Now, let’s prepare a sample connector definition using the KafkaConnector CRD.

    apiVersion: kafka.strimzi.io/v1beta2
    kind: KafkaConnector
    metadata:
      name: jmssourceconnector-{{ $connector.name }}
      labels:
        strimzi.io/cluster: {{ $.Values.kafkaConnectors.kccluster }}
    spec:
      class: com.datamountaineer.streamreactor.connect.jms.source.JMSSourceConnector # (1)
      tasksMax: {{ .Values.connector.tasksMax }} # (2)
      autoRestart:
        enabled: {{ $.Values.connector.autoRestart }} # (3)
      config: # (4)
        connect.jms.connection.factory: {{ .Values.connector.connectionFactory }}
        connect.jms.destination.selector: {{ .Values.connector.selector }}
        connect.jms.initial.context.factory: {{ .Values.connector.contextFactoryClass }}
        connect.jms.kcql: INSERT INTO test_topic SELECT * FROM {{ .Values.connector.queue }} WITHTYPE QUEUE
        connect.jms.queues: {{ .Values.connector.queue }}
        connect.jms.url: {{ .Values.connector.urls }}
        connect.progress.enabled: {{ .Values.connector.progessEnabled }}
        connect.jms.scale.type: {{ .Values.scaleType }}
        connect.jms.username: {{ .Values.username }}
        connect.jms.password: {{ .Values.password }}

This file defines a JMS source connector, which is responsible for reading data from a JMS queue and passing it to a Kafka topic:

- (1) The class implementing the connector – specifies the component that will be launched to receive messages from the JMS server. Each connector has its own implementation in the form of JAR files provided by the connector vendor. These files must be present in the Kafka Connect cluster to be used at runtime.

- (2) The number of connector tasks – specifies the number of task instances running in the Kafka Connect cluster. This parameter allows controlling the number of parallel instances, which affects the data processing throughput.

- (3) Automatic restart – when enabled, failed connectors and their tasks are restarted automatically.

- (4) Connector configuration parameters – specific to the given implementation. These should be set according to the documentation provided by the connector vendor. In the provided example, these are the parameters required to connect to an external JMS server.

In this way, we have completed the process of installing the Apache Kafka cluster and Kafka Connect, as well as deploying a sample topic and a sample connector.

As we can see, Kafka offers many configuration possibilities, and Strimzi allows defining them using dedicated CRDs. The use of Helm makes it possible to parameterize these CRDs (through dedicated Values files), allowing for maintaining multiple configurations, e.g., for development, testing, and production environments.
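The idea of layering environment-specific Values files over shared defaults can be sketched as a recursive dictionary merge. This is a rough model of the behavior, not Helm's implementation; `merge_values` is a hypothetical helper, and the keys mirror the `.Values.kafka.*` names used in the templates above with made-up values.

```python
def merge_values(base: dict, override: dict) -> dict:
    """Return a new dict where entries from override win, merging nested dicts."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = value
    return merged


# Shared defaults, e.g. suitable for a development environment (illustrative values).
defaults = {"kafka": {"replicas": 1, "storage": {"size": "10Gi", "class": "standard"}}}
# Production overrides only what differs.
production = {"kafka": {"replicas": 3, "storage": {"size": "500Gi"}}}

print(merge_values(defaults, production))
```

Keeping the overrides small makes the differences between environments easy to review in version control.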

One of the key advantages of this approach to managing the installation and configuration of the Kafka cluster is the ability to store configuration files (templates) in a version control system—these are YAML files stored in a dedicated repository. This makes it possible to easily audit changes and track modification history.

This configuration can then be deployed to environments using pipelines in tools such as GitLab or Jenkins. Additionally, it is possible to use automatic synchronization systems, such as ArgoCD (https://argo-cd.readthedocs.io/en/stable/), which monitor the repository for changes and automatically propagate them to target environments.

After discussing the deployment and configuration process of Kafka, let’s now move on to the topic of security.

Security of the Event-Driven Communication System

Every system designed for event-driven communication should ensure the security of transmitted data, including encryption, authentication, and authorization. In this regard, Apache Kafka, according to the official documentation, offers the following security mechanisms:

- Authentication of client connections to the broker using protocols such as SSL or SASL.

- Authentication of communication between the broker and Zookeeper.

- Encryption of transmitted data – both between clients and the broker and between brokers during replication.

- Authorization of operations performed by clients to control access to topics and resources.

Adapting Apache Kafka to meet security requirements requires configuring many parameters. For example, it is necessary to configure a secure listener:

    listeners=BROKER://localhost:9092
    listener.security.protocol.map=BROKER:SASL_SSL,CONTROLLER:SASL_SSL

It is also necessary to generate SSL keys using tools such as keytool. This process requires care, but it is worth the effort to secure the transmitted data.

If Kafka is deployed on a Kubernetes or OpenShift cluster and uses Strimzi, authentication configuration becomes easier. Strimzi automates many processes, providing appropriate entries in Custom Resource (CR) files, as well as additional CRDs (Custom Resource Definitions) that simplify security management.

Let’s start with the authentication configuration for the broker – the file defining the Kafka cluster includes the following options:

    apiVersion: kafka.strimzi.io/v1beta2
    kind: Kafka
    metadata:
      name: {{ include "broker.fullname" . }}
      labels:
        {{- include "broker.labels" . | nindent 4 }}
    spec:
      kafka:
        …
        listeners: # (1)
          - name: tls # (2)
            port: 9093
            type: internal
            tls: true
            authentication:
              type: tls
          - name: external # (3)
            port: 9094
            type: route
            tls: true
            authentication:
              type: tls
        …

- (1) Definition of listeners.

- (2) Internal listener (for the OpenShift cluster) running on port 9093, with TLS enabled and certificate-based authentication.

- (3) External listener on port 9094 (exposed via an OpenShift route) with TLS enabled and, as in the configuration above, certificate-based authentication.

After loading this configuration, the Strimzi operator will automatically generate the appropriate OpenShift secrets, which will be used for managing authentication and encrypting communication:

If we now look at the previously prepared and deployed Kafka Connect cluster, we will notice that it will not be able to connect to the Kafka broker. To restore communication between these components, it is necessary to adjust the Kafka Connect configuration, taking into account security aspects.

For this purpose, the appropriate changes must be made in the Custom Resource (CR) file for KafkaConnect:

    apiVersion: kafka.strimzi.io/v1beta2
    kind: KafkaConnect
    metadata:
      name: connect-cluster-{{ include "broker.fullname" . }}
    spec:
      rack:
        …
      bootstrapServers: {{ include "broker.fullname" . }}-kafka-bootstrap:9093
      authentication: # (1)
        type: tls
        certificateAndKey:
          secretName: kafka-connect-tls-user
          certificate: user.crt
          key: user.key
      tls: # (2)
        trustedCertificates:
          - secretName: {{ include "broker.fullname" . }}-cluster-ca-cert
            certificate: ca.crt

- (1) Here, we define how Kafka Connect will connect and authenticate with the Kafka broker. We specify the use of the TLS protocol and indicate that Kafka Connect should “present itself” using a certificate.

- (2) Here, we configure how Kafka Connect will “trust” the certificate that the Kafka broker uses for authentication when establishing the connection. It refers to the OpenShift secret, which was generated by the Strimzi operator in the previous step.

However, a key question remains: Where does Kafka Connect get the certificate in section (1)? After all, we have not defined the kafka-connect-tls-user secret anywhere.

The answer is simple—this certificate represents a user or application that connects to the Kafka broker. Strimzi provides a dedicated CRD named KafkaUser, which allows defining such a user:

    apiVersion: kafka.strimzi.io/v1beta2
    kind: KafkaUser
    metadata:
      name: kafka-connect-tls-user
      labels:
        strimzi.io/cluster: {{ include "broker.fullname" . }}
    spec:
      authentication: # (1)
        type: tls
      authorization: # (2)
        type: simple
        acls:
          - resource: # (3)
              type: topic # (3.1)
              name: event-data # (3.2)
              patternType: literal # (3.3)
            operations: # (3.4)
              - Create
              - Describe
              - DescribeConfigs
              - Read
              - Write
            host: "*" # (3.5)
          # consumer group aws s3 sink connector
          - resource:
              type: group # (4)
              name: connect-sinkconnector
              patternType: literal
            operations:
              - Create
              - Describe
              - Read
              - Write
            host: "*"

Let’s take a look at the contents of this file:

- (1) We define TLS authentication for the created user.

- (2) We specify the user’s permissions – this is a list of elements defining access to resources.

- (3) We define the first resource of type topic:

  - (3.1) We specify the resource type as a topic.

  - (3.2) We set its name.

  - (3.3) We indicate that the name should be treated literally – it is also possible to use the prefix value, which allows assigning permissions to all resources whose name starts with a specified string.

  - (3.4) We list the operations that the user is allowed to perform.

  - (3.5) We specify the address/host from which the user can connect.

- (4) We define another resource type – a consumer group with a specific name and a list of allowed operations for that group.

After loading this definition, the Strimzi operator will automatically generate the appropriate secret, which Kafka Connect will use for authentication with the broker, and which will be recognized by the broker:

If an application (KafkaUser) using such a certificate attempts to perform an unauthorized operation on the broker (i.e., one that we did not include in the KafkaUser definition), the broker will automatically block it.

In the logs, we will then see an error message:

2024-10-02 13:42:21,932 INFO Principal = User:CN=kafka-connect-tls-user is Denied Operation = Describe from host = 10.253.23.147 on resource = Cluster:LITERAL:kafka-cluster for request = ListPartitionReassignments with resourceRefCount = 1

The above error indicates that the user kafka-connect-tls-user does not have permission to retrieve cluster information. This is the expected behavior since we did not grant this access in the configuration.

To resolve this error, we need to add the appropriate permission to the configuration file:

          - resource:
              type: cluster
            operations:
              - Describe
            host: "*"

In the provided example, a new resource type appears, representing the Kafka cluster.
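The way such rules are evaluated can be approximated with a small deny-by-default check. This is a sketch of the idea only, not the broker's authorizer: it ignores the host field, and the `Acl` class, the `is_allowed` function, and the cluster name `kafka-cluster` (taken from the log line above) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    resource_type: str      # "topic", "group" or "cluster"
    name: str
    pattern_type: str       # "literal" or "prefix"
    operations: frozenset


def is_allowed(acls, resource_type: str, resource_name: str, operation: str) -> bool:
    """Allow an operation only if some rule explicitly grants it."""
    for acl in acls:
        if acl.resource_type != resource_type:
            continue
        if acl.pattern_type == "literal":
            matches = acl.name == resource_name
        elif acl.pattern_type == "prefix":
            matches = resource_name.startswith(acl.name)
        else:
            matches = False
        if matches and operation in acl.operations:
            return True
    return False  # nothing matched: deny


acls = [
    Acl("topic", "event-data", "literal",
        frozenset({"Create", "Describe", "DescribeConfigs", "Read", "Write"})),
]
print(is_allowed(acls, "topic", "event-data", "Read"))
print(is_allowed(acls, "cluster", "kafka-cluster", "Describe"))

# Granting Describe on the cluster resolves the denial shown in the log.
acls.append(Acl("cluster", "kafka-cluster", "literal", frozenset({"Describe"})))
print(is_allowed(acls, "cluster", "kafka-cluster", "Describe"))
```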

As we can see, Apache Kafka offers extensive security configuration capabilities—ranging from authentication and encryption to authorization. If we use Strimzi, configuring these mechanisms becomes significantly easier, as many elements are automatically generated by the Strimzi operator.

We have thus confirmed that, in terms of security, Apache Kafka is an enterprise-class solution that meets the requirements of even the most stringent security departments.

Now, let’s move on to analyzing Kafka’s high availability and the methods for ensuring its reliability.

High Availability and Disaster Recovery

Event-driven communication systems are responsible for transmitting messages between multiple systems and services. The more components rely on such communication, the more critical the broker’s role becomes in the entire information flow.

But what happens if a broker fails? Could it become a Single Point of Failure (SPOF)?

The answer is: Yes—if the system is poorly deployed and configured, it can become a SPOF.

How does Apache Kafka address this challenge?

The primary mechanism ensuring high availability in Kafka is replication.

Replication means that partition data from a topic is stored across multiple nodes in the cluster. If one of the nodes fails, its role can be taken over by other nodes, since the data is replicated.

The diagram below illustrates this process:

The Kafka cluster consists of three brokers. In the diagram below, partition leaders are marked in blue.

For example, Broker 1 serves as the leader of partition 0 in topic 1 and is responsible for replicating its data to other nodes in the cluster:

- Broker 2 is the first replica of partition 0 in topic 1 (marked in orange).

- Broker 3 is the second replica of partition 0 in topic 1 (marked in green).

Broker 2 and Broker 3 hold follower replicas. As long as they stay synchronized with the leader, they are called in-sync replicas (ISR).

If Broker 1 fails, one of the in-sync replicas will automatically be elected as the new leader for partition 0 of topic 1, ensuring that clients can continue using the topic without interruption. Thanks to this mechanism, Kafka provides failure resilience.
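The failover rule can be sketched as a function that picks the new leader from the replicas that are both in sync and still alive. In a real cluster this decision is made by the cluster controller, so treat the code as a simplified model; `elect_leader` is a hypothetical helper.

```python
from __future__ import annotations


def elect_leader(replicas: list[int], in_sync: set[int], failed: set[int]) -> int | None:
    """Return the first replica (in assignment order) that is in sync and alive."""
    for broker in replicas:
        if broker in in_sync and broker not in failed:
            return broker
    return None  # no eligible replica: the partition is unavailable


replicas = [1, 2, 3]  # Broker 1 is the original leader of partition 0
print(elect_leader(replicas, in_sync={1, 2, 3}, failed=set()))
print(elect_leader(replicas, in_sync={1, 2, 3}, failed={1}))
```

If the failed leader was the only in-sync replica, the function returns `None`, which illustrates why a topic with a single replica remains a single point of failure.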

Additionally, if Kafka is deployed on an OpenShift cluster that is distributed between two data centers (main – Data Center and backup – Disaster Recovery Center), it is possible to enforce broker placement so that some brokers also run in the backup center, which increases the availability of the installation.

For this purpose, it is worth using affinity configuration, which allows control over where specific cluster nodes are deployed.

    affinity:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          …

Another key element in ensuring reliability is the ability to restore the system after a failure and create backups. Several methods are available in this regard:

Option 1:

Some users utilize the Kafka MirrorMaker tool, which allows data replication between Kafka clusters. In this model, there is a primary cluster and a backup cluster—located in a different location. MirrorMaker is used to synchronize data between these clusters. In the event of a primary cluster failure, traffic will be redirected to the backup cluster.

Option 2:

For installations on an OpenShift cluster, where the Kafka cluster disks are provided by OpenShift as Persistent Volume Claims (PVCs), the volumes can be backed up using snapshots. One of the tools enabling this is Commvault.

In one of Inteca’s projects, we tested whether it was possible to back up Kafka cluster PVCs without shutting down its nodes. The backup process itself was successful, but data recovery proved problematic and did not always succeed. The main challenge was data consistency after restoration.

To effectively use such backup tools, it is advisable to shut down the Kafka cluster during the snapshot creation process. While this results in a short-term system downtime, it can significantly increase the reliability of the data restoration process.

Option 3:

A commonly used method for backing up Apache Kafka is to send data to S3. In this approach, an S3 sink connector is used within Kafka Connect, which retrieves data from topics and sends it to S3 (the S3 source connector is disabled):

After restoring data from a backup, the connectors return to their original settings. Note that changing the state of the connectors during and after a restoration is a manual, administrative task.

This is a commonly used approach to backup and recovery for Apache Kafka. However, one crucial aspect must be considered: proper data restoration and the recovery of consumer group offsets:

- For data restoration, it is essential to ensure that data is assigned to the correct partitions in the correct order. If this fails, consumer groups may start reprocessing already processed data or, in some cases, fail to process certain data altogether.

- The second key aspect is preserving information about where a given consumer group finished processing, i.e., maintaining offset information for each topic partition.

We will illustrate this with an example:

The consumer reads data from topic 1. From partition 0, it has fetched and processed message 0, just as it has for partition 1. After processing, the consumer informs the broker that these messages have been processed, and this information is stored in a special internal Kafka topic named __consumer_offsets.

Message 1 from partition 0 has not been acknowledged, meaning that the group offset for this partition is lower than the offset of this message.

If, after a failure, we read data from the backup, and during the restoration process, messages switch partitions or change order, the stored information about where each consumer finished processing will become useless. Moreover, using this incorrect data can lead to errors.

For example, if after recovery, partition 1 contains both the purple and orange messages, while partition 0 contains only the green message, the following problems may occur:

- The green message will never be processed, because the offset for this partition indicates that message with offset 0 has already been processed—even though it actually has not been.

- The orange message, which has been incorrectly placed in partition 1 instead of partition 0, may have offset 1. In the context of the stored offset for this partition, this means that it must be processed—even though it was already processed earlier.

Thus, it is critical that during restoration, both the correct partition assignment and message order are maintained. Additionally, the processing state of each consumer group, i.e., their offsets, must be correctly restored.
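The scenario can be replayed with a few lines of code. The sketch assumes, based on the description above, that the orange message originally sat at offset 0 and the green message at offset 1 of partition 0, and the purple message at offset 0 of partition 1; `pending_messages` is a hypothetical helper.

```python
def pending_messages(partitions: dict, committed: dict) -> dict:
    """Messages a consumer group would still read: everything from the committed offset on."""
    return {p: log[committed.get(p, 0):] for p, log in partitions.items()}


# Committed offsets: offset 0 was processed in both partitions, so reading resumes at 1.
committed = {0: 1, 1: 1}

original = {0: ["orange", "green"], 1: ["purple"]}
bad_restore = {0: ["green"], 1: ["purple", "orange"]}

print(pending_messages(original, committed))     # green is still waiting in partition 0
print(pending_messages(bad_restore, committed))  # green is skipped, orange is read again
```

The committed offsets are identical in both runs; only the placement of the restored messages differs, and that alone is enough to lose one message and duplicate another.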

Which connectors can be used to implement such a backup?

Confluent provides dedicated connectors, such as Confluent S3 Source Connector. However, it is important to note that these require a license.

An alternative is open-source connectors like Apache Camel S3 Sink and Apache Camel S3 Source, but they have some implementation limitations:

- They do not save partition information, meaning the original partition of a message is lost.

- Each message is stored as a separate object in S3, which increases storage costs and complexity.

Because of these limitations, using them in production requires customization, such as implementing a dedicated Single Message Transform (SMT) for the sink connector. This transform would propagate partition and offset information to S3. A second transform for the source connector would then use the partition and offset information stored in S3 to restore messages correctly.
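The information the two transformers need to carry can be sketched as follows. Real Single Message Transforms are written in Java against the Kafka Connect API; the Python below only models the data flow. The envelope field names and both function names are hypothetical, and the sketch assumes that a restored partition starts at the same base offset as the original one.

```python
import base64
import json
from collections import defaultdict


def to_backup_object(topic, partition, offset, key, value):
    """Sink side: wrap a record so its partition and offset survive in object storage."""
    return json.dumps({
        "topic": topic,
        "partition": partition,
        "offset": offset,
        "key": base64.b64encode(key).decode("ascii") if key is not None else None,
        "value": base64.b64encode(value).decode("ascii"),
    })


def restore_plan(backup_objects):
    """Source side: group records by original partition and order them by original offset."""
    plan = defaultdict(list)
    for raw in backup_objects:
        record = json.loads(raw)
        plan[record["partition"]].append(record)
    for records in plan.values():
        records.sort(key=lambda r: r["offset"])
    return dict(plan)


# Objects may come back from storage in any order.
stored = [
    to_backup_object("topic-1", 0, 1, b"k2", b"green"),
    to_backup_object("topic-1", 1, 0, b"k3", b"purple"),
    to_backup_object("topic-1", 0, 0, b"k1", b"orange"),
]
plan = restore_plan(stored)
for partition in sorted(plan):
    values = [base64.b64decode(r["value"]).decode() for r in plan[partition]]
    print(partition, values)
```

Writing each record back to the partition named in its envelope, in ascending offset order, is what keeps the stored consumer group offsets meaningful after the restore.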

We have now covered aspects related to high availability and backups. Let’s move on to monitoring our Kafka installation in a production environment.

Monitoring Kafka Operations

For any event-driven communication system, it is crucial to monitor its production performance to prevent issues such as data loss, processing downtimes, or insufficient processing capacity.

Due to its architecture and operation model, Apache Kafka requires monitoring of several key aspects, including:

- Cluster health,

- Resource utilization,

- Replication,

- Connectors,

- Processing performance.

Apache Kafka can expose this information to Prometheus (https://prometheus.io/), from where the data can be retrieved and visualized using tools like Grafana (https://grafana.com/).

To implement such monitoring for a Kafka cluster deployed on OpenShift, a PodMonitor can be defined. This is a custom resource provided by the Prometheus Operator, which is built into the OpenShift platform:

    apiVersion: monitoring.coreos.com/v1
    kind: PodMonitor
    metadata:
      name: kafka-resources-metrics
      labels:
        app: strimzi
    spec:
      selector:
        matchExpressions:
          - key: "strimzi.io/kind"
            operator: In
            values: ["Kafka", "KafkaConnect", "KafkaMirrorMaker", "KafkaMirrorMaker2"]
      namespaceSelector:
        matchNames:
          - {{ .Release.Namespace }}
      podMetricsEndpoints:
        - path: /metrics
          port: tcp-prometheus
          interval: 1m
          relabelings:
            - separator: ;
              regex: __meta_kubernetes_pod_label_(strimzi_io_.+)
              replacement: $1
              action: labelmap

…

After uploading this definition and configuring Grafana, we will obtain the following view:

It contains information such as:

- Number of replicas,

- Number of In-Sync Replicas,

- Detailed data about partitions,

- Number of messages published per second,

- Number of messages processed by a consumer group per second,

- Lag, which is the difference between the current message offset in topic partitions and the offset processed by the consumer group. The higher the lag, the worse it is, as it may indicate that the consumer group is unable to keep up with message processing.
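The lag figure can be computed by hand from two numbers per partition. The sketch below assumes a hypothetical snapshot of log end offsets and committed offsets; the function name `consumer_lag` and all values are illustrative, not taken from a real cluster.

```python
def consumer_lag(log_end_offsets, committed_offsets):
    """Per-partition lag: latest offset in the partition minus the group's committed offset."""
    return {
        p: max(end - committed_offsets.get(p, 0), 0)
        for p, end in log_end_offsets.items()
    }


# Hypothetical snapshot of a topic with three partitions.
log_end_offsets = {0: 1500, 1: 1480, 2: 1510}
committed_offsets = {0: 1500, 1: 1200, 2: 1505}

lag = consumer_lag(log_end_offsets, committed_offsets)
print(lag)                # per-partition lag
print(sum(lag.values()))  # total lag for the group on this topic
```

A single snapshot says little on its own. What matters for alerting is whether the value keeps growing between consecutive measurements.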

Additionally, alerts can be defined using Prometheus Alertmanager or Grafana Alerting, so that the team is notified as soon as an issue appears.

Another recommended tool in this area is Redpanda Console. This tool allows verification of cluster status, connectors, consumer groups, and topics:

Additionally, this tool allows for performing active operations, such as:

- Restarting a connector,

- Deleting messages from a topic,

- Deleting a topic.

All these functionalities are useful for daily Kafka maintenance and administrative operations.

Equipped with monitoring tools, we can now move on to the final topic: high processing performance.

High Processing Performance

Event-driven communication systems must be capable of high-performance data processing. These systems integrate and transmit messages between various other systems, and slow message processing can lead to serious issues, especially in real-time or near-real-time solutions.

As mentioned in the architecture section, an important factor in ensuring processing efficiency is choosing the appropriate number of partitions for topics. The number of partitions determines the maximum number of threads that can process data from a topic in parallel. However, this is not the only parameter to consider.
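The link between partition count and parallelism can be illustrated with a simplified assignment routine. Kafka's real assignors (range, round-robin, cooperative sticky) are more elaborate; this sketch only assumes the rule that each partition is read by exactly one consumer in a group. The function name and consumer names are hypothetical.

```python
def assign_partitions(num_partitions, consumers):
    """Spread partitions over consumers round-robin; each partition gets exactly one owner."""
    assignment = {c: [] for c in consumers}
    for partition in range(num_partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment


# Four partitions, six consumers in one group: two consumers stay idle.
assignment = assign_partitions(4, ["c1", "c2", "c3", "c4", "c5", "c6"])
active = [c for c, parts in assignment.items() if parts]
print(assignment)
print(len(active))  # number of consumers that actually receive data
```

Adding consumers beyond the number of partitions does not increase throughput; the extra instances only act as standbys.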

Kafka handles massive amounts of data, which are constantly transmitted between clients and brokers as well as between brokers during replication. Network communication overhead therefore has a major impact on overall processing efficiency. Apache Kafka provides several parameters to manage it:

- Compression (compression.type) – enables compression on the producer side, for example in a connector or stream application. Compression can significantly reduce the size of transmitted data. Kafka supports the following compression types: none, gzip, snappy, lz4, zstd.

  - By default, compression is set to ‘none’ (no compression). This is not recommended for production deployments unless there is a justified reason for such a configuration.

  - Compression is enabled on the Kafka producer side. Consumers do not require special configuration, and decompression is handled automatically by the Kafka client libraries.

  - Enabling compression adds processing overhead for both the producer and the consumer, but in practice it usually increases producer throughput significantly.

- Batch size (batch.size) – another producer-side parameter, which defines the maximum size of the message batches sent by the producer. The default value is 16 KB (16384 bytes).

  - This means that a message larger than 16 KB is sent in a batch of its own, which increases network overhead and reduces throughput.

  - It is better to send messages less frequently in larger batches, so this parameter should be adjusted to the message sizes used in the system.

- Linger time (linger.ms) – another producer-side parameter, closely related to batch.size.

  - This parameter defines how long the producer waits before sending a batch.

  - If the batch fills up before this time expires, it is sent immediately.

  - If the batch is not full but the linger time expires, it is sent anyway.

  - The default value is 0 ms in Kafka versions up to 3.x (Kafka 4.0 raised it to 5 ms). With 0 ms, messages are sent immediately, which can increase network overhead and reduce throughput.
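The interaction between batch.size and linger.ms can be made concrete with a toy model. This is a simplified sketch, not the producer's actual algorithm: it checks the linger timer only when the next message arrives, handles a single partition, and ignores that a real producer can still batch with a linger of 0 when records arrive faster than they can be sent. The function name and the traffic pattern are assumptions.

```python
def simulate_batches(messages, batch_size, linger_ms):
    """Group (arrival_ms, size_bytes) messages into batches.

    A batch is sent when the next message would not fit (batch full)
    or when linger_ms has elapsed since its first message arrived.
    Returns a list of batches, each a list of message sizes.
    """
    batches, current, current_bytes, opened_at = [], [], 0, None
    for arrival_ms, size in messages:
        if current:
            expired = arrival_ms - opened_at >= linger_ms
            full = current_bytes + size > batch_size
            if expired or full:
                batches.append(current)
                current, current_bytes = [], 0
        if not current:
            opened_at = arrival_ms
        current.append(size)
        current_bytes += size
    if current:
        batches.append(current)
    return batches


# One 1 KB message every 10 ms, 20 messages in total.
traffic = [(i * 10, 1024) for i in range(20)]

print(len(simulate_batches(traffic, batch_size=16384, linger_ms=0)))    # every message alone
print(len(simulate_batches(traffic, batch_size=16384, linger_ms=100)))  # time-limited batches
print(len(simulate_batches(traffic, batch_size=4096, linger_ms=100)))   # size-limited batches
```

In this model the same traffic produces 20 requests without lingering, 2 requests when the timer is the limit, and 5 requests when the batch size is the limit, which is why the two parameters are best tuned together.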

Below is an example from one of the implemented systems:

As seen in the attached charts, the system utilizes three stream applications.

- One of these applications (yellow chart) processes around 1,250 messages per second.

- The green application processes 750 messages per second.

- The blue application processes the fewest—below 500 messages per second.

As a result, the blue application has the highest lag (visible on the chart to the right), which continually increases. This may lead to delayed data delivery to the target systems.

After modifying the parameters as follows:

- compression.type=lz4 for all producers,

- batch.size=131072 (128 KB) for all producers,

- linger.ms=100 (100 ms) for all producers,

we obtained the following results:

On the chart showing the number of messages consumed per second (left side), the graphs of all applications overlap, indicating that these applications are now processing nearly the same number of messages per second.

On the chart displaying the lag of individual consumers (right side), it is visible that none of the lags are increasing—they remain at a low and stable level, which is the desired behavior.

The result is remarkable because between the first and second test runs, no other parameters were changed, no modifications were made to the application, and the test was conducted on the same servers. The impact of just these three parameters on throughput is significant.
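One plausible reason why the three settings reinforce each other is that the Kafka producer compresses whole batches rather than individual messages, so larger batches give the compressor more repetition to work with. The sketch below illustrates the effect with zlib from the Python standard library as a stand-in for lz4; the event structure is invented, and the exact sizes depend on the data.

```python
import json
import zlib


def compressed_size(payloads):
    """Size in bytes after compressing the payloads together as one block."""
    return len(zlib.compress(b"".join(payloads)))


# 200 hypothetical JSON events with the same structure and different values.
events = [
    json.dumps({"orderId": i, "status": "CREATED", "currency": "EUR", "amount": i * 10}).encode()
    for i in range(200)
]

raw = sum(len(e) for e in events)
one_by_one = sum(compressed_size([e]) for e in events)
as_one_batch = compressed_size(events)

print(raw, one_by_one, as_one_batch)
```

Compressing the events as one batch yields a much smaller payload than compressing each event separately, because field names and recurring values are encoded only once per batch.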

The parameter values presented above are not a reference standard or the only correct solution. In each case, the values of these parameters may differ, and they should be selected thoughtfully, based on performance testing and the specific requirements of the solution.

Additionally, before tweaking these parameters, we should first ensure the efficiency of our applications. In the Kafka context, applications—especially streaming applications—should process messages very quickly, in the range of milliseconds or a few dozen milliseconds.

Longer processing times may result in a situation where even fine-tuning the above parameters does not yield any improvements.
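A quick back-of-the-envelope calculation shows why. The sketch assumes that each thread handles messages strictly one after another and that processing time is the only cost; the target of 1,250 messages per second is borrowed from the chart discussed earlier, and the function names are hypothetical.

```python
import math


def max_throughput_per_thread(processing_ms):
    """Upper bound on messages per second for one thread that handles messages sequentially."""
    return 1000 / processing_ms


def threads_needed(target_msgs_per_s, processing_ms):
    """Minimum number of parallel threads (and therefore partitions) to sustain the target rate."""
    return math.ceil(target_msgs_per_s * processing_ms / 1000)


print(max_throughput_per_thread(20))  # 20 ms per message
print(threads_needed(1250, 20))
print(threads_needed(1250, 200))
```

At 20 ms per message a single thread tops out at 50 messages per second, so 1,250 messages per second needs at least 25 threads and partitions. At 200 ms per message the same rate needs 250, and no producer tuning changes that.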

Summary

Apache Kafka is a solution that can be successfully used to provide efficient and secure event-driven communication. In this article, I have tried to address the functional areas that form the foundation of such systems and must be considered during their implementation. I hope that the information gathered here will help you use Kafka effectively. The aim of the article was to share real project experience, and the fact that Kafka performs excellently in many respects is entirely to its own credit and is not a form of product placement 😊.
