Apache Kafka is the backbone of many modern event-driven systems and real-time data pipelines. But deploying Kafka on Kubernetes isn’t just a matter of spinning up a few pods. Kafka’s stateful nature, reliance on the operating system’s page cache, and sensitivity to network changes demand a thoughtful approach. This guide walks you through deploying production-ready Kafka on Kubernetes using the Strimzi Operator, Inteca’s preferred, GitOps-friendly method for regulated and enterprise-grade environments.

Why running Apache Kafka on Kubernetes is challenging

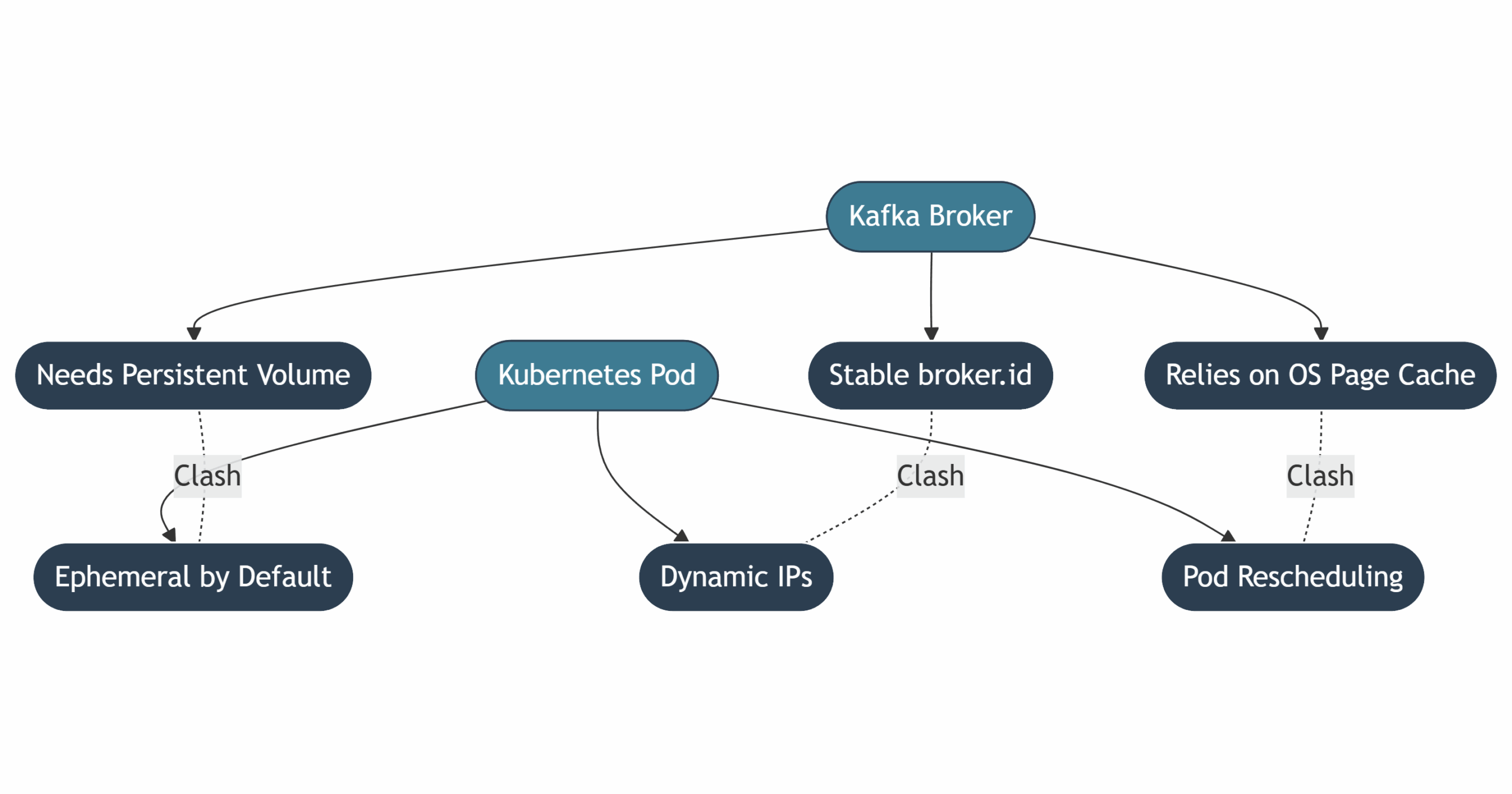

Kafka was not originally designed with container orchestration in mind. Deploying Apache Kafka on Kubernetes introduces several challenges that require a deep understanding of both Kafka internals and Kubernetes mechanics.

Apache Kafka’s stateful architecture vs Kubernetes’ stateless deployment model

Kafka brokers are stateful components. They depend on stable identities (broker.id), persistent volumes for logs, and consistent network addressing. Kubernetes, on the other hand, favors stateless workloads where pods are ephemeral and easily rescheduled. Without proper configuration, this can lead to data loss, incorrect broker behavior, or performance degradation.
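
As a rough illustration of the persistence side, the fragment below sketches how broker storage might be declared in a Strimzi Kafka resource so that each broker keeps its log directory when its pod is rescheduled. The size and the `deleteClaim` choice are assumptions made for this example, not values taken from this guide.

```yaml
# Fragment of a Kafka custom resource (illustrative values only)
spec:
  kafka:
    replicas: 3
    storage:
      type: persistent-claim   # one PersistentVolumeClaim per broker
      size: 100Gi              # assumed size; base it on retention and throughput
      deleteClaim: false       # keep the volumes if the cluster resource is deleted
```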

Page cache in Kafka Broker performance

Kafka achieves high throughput by relying on the operating system’s page cache to serve messages directly from memory instead of disk. When pods are rescheduled or restarted, this cache is lost, leading to increased disk I/O and latency. Ensuring that pods remain stable and stateful is essential for maintaining Kafka’s performance profile.

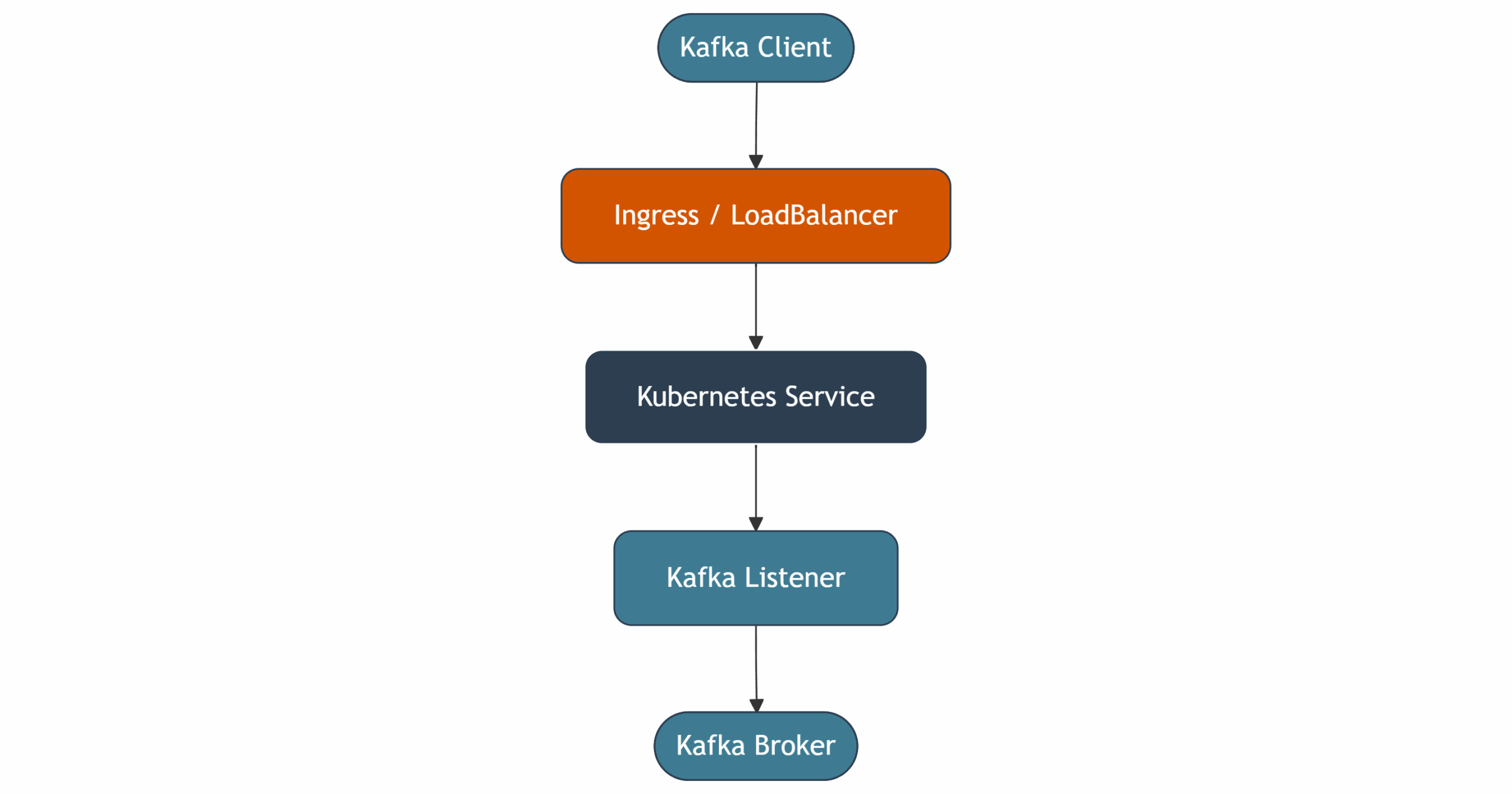
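
One way to leave room for the page cache is to keep the JVM heap well below the memory granted to the broker container. The fragment below is a minimal sketch of that idea in a Strimzi Kafka resource. All sizes are assumptions chosen for illustration and would need tuning against real workloads.

```yaml
# Fragment of a Kafka custom resource (illustrative values only)
spec:
  kafka:
    resources:
      requests:
        memory: 16Gi     # assumed; request equals limit for predictable scheduling
        cpu: "4"
      limits:
        memory: 16Gi
    jvmOptions:
      "-Xms": 4g         # heap kept well below the container memory,
      "-Xmx": 4g         # so the remainder can be used by the page cache
```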

Networking, listeners, and exposing Kafka

Kafka clients connect via advertised listeners, which expose specific hostnames and ports. Mapping these listeners correctly using Kubernetes Services or Ingress controllers can be tricky, especially in multi-tenant or cross-cluster environments. Misconfigurations can result in connection timeouts, mismatched IPs, and failed message delivery.

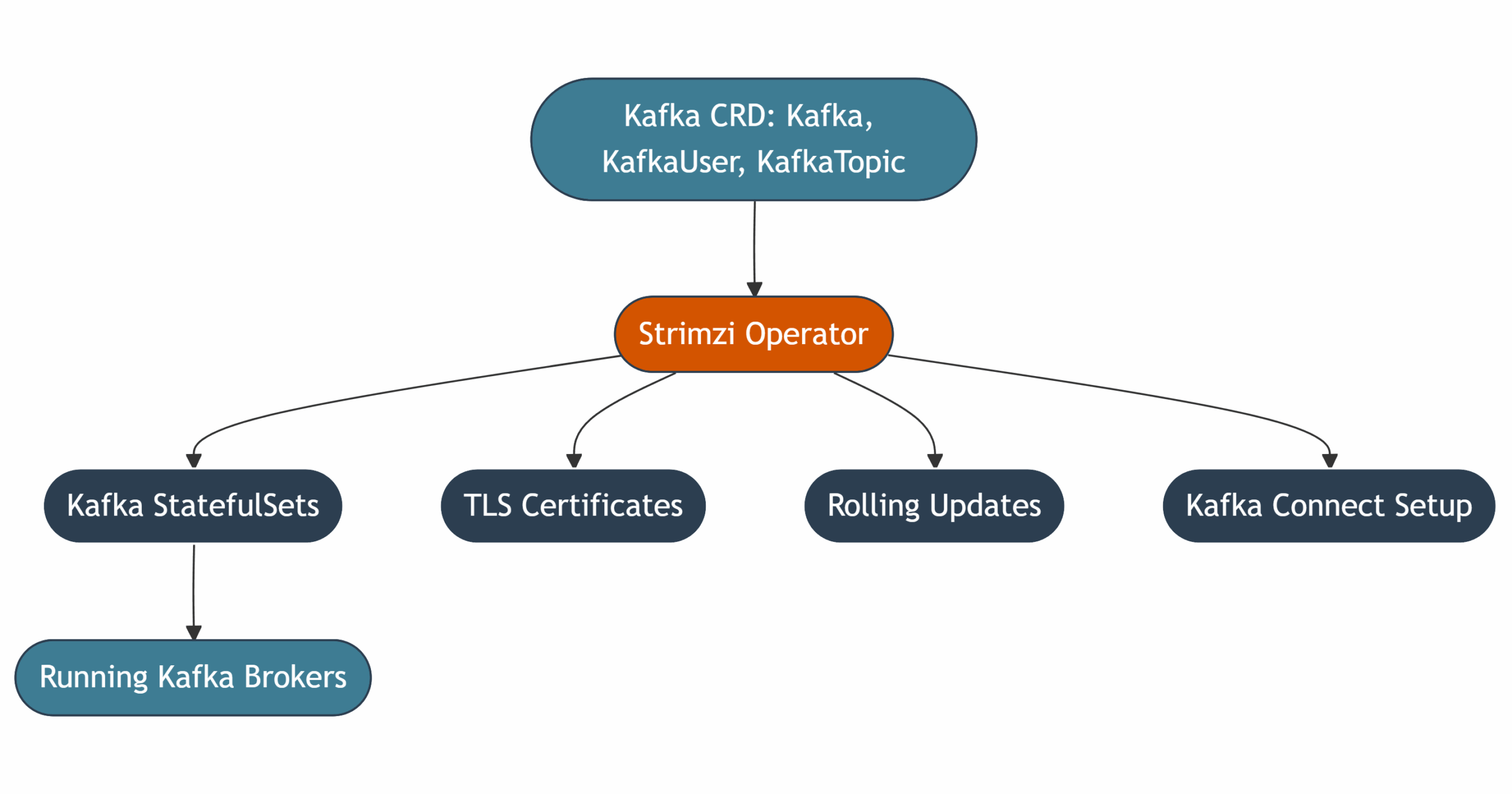
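
With Strimzi, listeners are declared in the Kafka resource and the operator derives the advertised addresses for each broker. The fragment below is a hedged sketch with one in-cluster listener and one external listener. The listener names, ports, and the `loadbalancer` type are assumptions for this example.

```yaml
# Fragment of a Kafka custom resource (illustrative values only)
spec:
  kafka:
    listeners:
      - name: tls              # in-cluster clients, encrypted
        port: 9093
        type: internal
        tls: true
      - name: external         # clients outside the Kubernetes cluster
        port: 9094
        type: loadbalancer     # assumed; nodeport, ingress, or route are alternatives
        tls: true
```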

What is Strimzi? Deploying Apache Kafka K8S the right way

Strimzi is an open-source Kubernetes Operator purpose-built to simplify Apache Kafka deployment and lifecycle management. It encapsulates complex operations such as broker orchestration, rolling upgrades, certificate management, and CRD-based Kafka configuration into a Kubernetes-native workflow.

Why use Strimzi to run Kafka on Kubernetes?

Strimzi provides a declarative, GitOps-compatible interface to Kafka. It includes:

- Custom Resource Definitions (CRDs) for Kafka, Topics, Users, Connect, Bridge, and more

- Seamless TLS certificate generation and renewal

- Compatibility with OAuth2, SCRAM, and TLS-based authentication

- Support for both open-source and Red Hat-backed Kafka distributions

By integrating with Kubernetes-native tooling, Strimzi makes it easier to standardize Kafka operations across environments and automate Day-2 tasks such as monitoring, scaling, and disaster recovery.

Kafka K8S architecture (Strimzi-Based)

A Strimzi-managed Kafka deployment consists of several core components:

- Kafka Cluster: Includes brokers (with or without ZooKeeper) and their configuration.

- Strimzi Operator: Reconciles declared resources into actual running components.

- Entity Operator: Runs the Topic Operator and the User Operator, which manage Kafka topics and users.

- Kafka Connect: Handles external data integration via connectors.

- Monitoring stack: Integrates Prometheus and Grafana for observability.

    +-------------------+       +---------------------------------+
    |   Kafka Clients   | <---> |        Kafka Cluster CRD        |
    +-------------------+       | (Brokers + ZooKeeper or KRaft)  |
                                +----------------+----------------+
                                                 |
                                +----------------v----------------+
                                |        Strimzi Operator         |
                                | Reconciles Kafka, Topics, etc.  |
                                +----------------+----------------+
                                                 |
                          +----------------------+----------------------+
                          |                      |                      |
                  +-------v-------+      +-------v-------+      +-------v-------+
                  | Kafka Connect |      | Kafka Topics  |      |  Kafka Users  |
                  +---------------+      +---------------+      +---------------+

    Monitoring: Prometheus + Grafana        Auth: TLS/OAuth2 + KafkaUser

This architecture allows Kubernetes-native orchestration of the entire Kafka ecosystem, improving reproducibility, resilience, and operational consistency.

Kubernetes deployment prerequisites to run Apache Kafka

Before starting, ensure you have the following:

- A running Kubernetes 1.21+ or OpenShift 4.x cluster

- Helm 3 installed (optional but recommended)

- kubectl or oc CLI access

- A namespace created for Kafka components (e.g., kafka)

- Cluster role permissions to install CRDs and Operators

How to deploy Apache Kafka on Kubernetes with Strimzi

The following steps describe how to deploy Kafka using Strimzi. This approach supports both development and production environments with minimal configuration drift.

1. Install the Strimzi Operator to manage Kafka

Installing the Operator sets up the control loop responsible for reconciling Kafka resources.

You can install it via kubectl or Helm:

    kubectl create namespace kafka
    kubectl apply -f 'https://strimzi.io/install/latest?namespace=kafka' -n kafka

Or with Helm:

    helm repo add strimzi https://strimzi.io/charts/
    helm install kafka-operator strimzi/strimzi-kafka-operator -n kafka

2. Deploy a Kafka Cluster (ZooKeeper-Based)

Once the operator is running, you can create your Kafka cluster using a custom resource:

    apiVersion: kafka.strimzi.io/v1beta2
    kind: Kafka
    ...

This custom resource defines a 3-broker Kafka cluster with durable storage and TLS-encrypted internal communication.
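
For orientation, a complete resource of this kind might look roughly like the sketch below. It is not the manifest elided above: the cluster name `my-cluster`, the storage sizes, and the replication settings are assumptions for illustration. It also assumes a Strimzi release that still supports ZooKeeper-based clusters, since newer releases have moved to KRaft only.

```yaml
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster            # hypothetical cluster name
  namespace: kafka
spec:
  kafka:
    version: 3.6.0
    replicas: 3               # three brokers
    listeners:
      - name: tls
        port: 9093
        type: internal
        tls: true             # TLS-encrypted client connections inside the cluster
        authentication:
          type: tls           # clients present certificates issued for KafkaUser resources
    authorization:
      type: simple            # enables ACLs declared on KafkaUser resources
    config:
      default.replication.factor: 3
      min.insync.replicas: 2
      offsets.topic.replication.factor: 3
      transaction.state.log.replication.factor: 3
      transaction.state.log.min.isr: 2
    storage:
      type: persistent-claim
      size: 100Gi             # assumed size
      deleteClaim: false
  zookeeper:
    replicas: 3
    storage:
      type: persistent-claim
      size: 10Gi              # assumed size
      deleteClaim: false
  entityOperator:
    topicOperator: {}         # reconciles KafkaTopic resources
    userOperator: {}          # reconciles KafkaUser resources
```

Applying a file like this with `kubectl apply -f` in the `kafka` namespace asks the operator to create the brokers, the ZooKeeper ensemble, and the Entity Operator.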

3. Create topics declaratively

Kafka topics can be defined as YAML manifests, making them reproducible across environments:

    apiVersion: kafka.strimzi.io/v1beta2
    kind: KafkaTopic
    ...

This ensures topic creation is part of your infrastructure-as-code workflow.
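
A topic manifest could be sketched as follows. The topic name `orders`, the cluster name `my-cluster`, and the partition and retention values are hypothetical and only serve the example.

```yaml
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaTopic
metadata:
  name: orders                        # hypothetical topic name
  namespace: kafka
  labels:
    strimzi.io/cluster: my-cluster    # must match the name of the Kafka resource
spec:
  partitions: 6                       # assumed value
  replicas: 3
  config:
    retention.ms: 604800000           # 7 days, expressed in milliseconds
    min.insync.replicas: 2
```

The `strimzi.io/cluster` label is what ties the topic to a specific cluster, so the Topic Operator knows where to create it.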

4. Configure Kafka users with TLS authentication

Strimzi simplifies user management through CRDs, allowing you to enforce access control declaratively:

    apiVersion: kafka.strimzi.io/v1beta2
    kind: KafkaUser
    ...

You can create TLS, SCRAM, or OAuth2-authenticated users with precise ACLs.
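
As a hedged example, a TLS-authenticated consumer with read-only access to a single topic might be declared like this. The user, topic, and consumer group names are hypothetical. The sketch assumes the cluster has `simple` authorization enabled and a listener with TLS client authentication.

```yaml
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaUser
metadata:
  name: orders-consumer               # hypothetical user name
  namespace: kafka
  labels:
    strimzi.io/cluster: my-cluster    # must match the name of the Kafka resource
spec:
  authentication:
    type: tls                         # the User Operator issues a client certificate
  authorization:
    type: simple
    acls:
      - resource:
          type: topic
          name: orders                # hypothetical topic
          patternType: literal
        operations:
          - Read
          - Describe
        host: "*"
      - resource:
          type: group
          name: orders-consumer-group # hypothetical consumer group
          patternType: literal
        operations:
          - Read
        host: "*"
```

The User Operator stores the generated certificate and key in a Secret named after the user, which client applications can then mount.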

5. Set up Kafka Connect

Kafka Connect enables integration with external systems (databases, cloud storage, etc.). With Strimzi, you can run Connect as part of your cluster:

    apiVersion: kafka.strimzi.io/v1beta2
    kind: KafkaConnect
    ...

This configuration also supports automatic TLS and plugin configuration via container images.
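
A Connect cluster along these lines could be sketched as below. The image reference, resource names, and internal topic names are assumptions. The sketch also assumes a listener that does not require client authentication. If the listener enforces it, an `authentication` section would be needed as well.

```yaml
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaConnect
metadata:
  name: my-connect                                   # hypothetical name
  namespace: kafka
  annotations:
    strimzi.io/use-connector-resources: "true"       # manage connectors as KafkaConnector resources
spec:
  version: 3.6.0
  replicas: 2
  bootstrapServers: my-cluster-kafka-bootstrap:9093  # bootstrap Service of the assumed cluster
  image: registry.example.com/kafka-connect-plugins:latest  # hypothetical image with connector plugins
  tls:
    trustedCertificates:
      - secretName: my-cluster-cluster-ca-cert       # cluster CA Secret created by Strimzi
        certificate: ca.crt
  config:
    group.id: connect-cluster
    offset.storage.topic: connect-cluster-offsets
    config.storage.topic: connect-cluster-configs
    status.storage.topic: connect-cluster-status
```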

6. Enable Monitoring with Prometheus + Grafana

Observability is crucial for running Kafka in production. Strimzi integrates with the Prometheus Operator using a PodMonitor:

    apiVersion: monitoring.coreos.com/v1
    kind: PodMonitor
    ...

Strimzi provides pre-built Grafana dashboards to visualize broker health, throughput, latency, and more.
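
A PodMonitor for Strimzi-managed pods might look like the following sketch, modeled on the example that ships with Strimzi. It assumes the Prometheus Operator is installed and that metrics are enabled in the Kafka resource. The resource name and labels are illustrative.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: kafka-resources-metrics   # illustrative name
  namespace: kafka
  labels:
    app: strimzi
spec:
  selector:
    matchExpressions:
      - key: strimzi.io/kind      # label Strimzi sets on the pods it manages
        operator: In
        values: ["Kafka", "KafkaConnect"]
  namespaceSelector:
    matchNames:
      - kafka
  podMetricsEndpoints:
    - path: /metrics
      port: tcp-prometheus        # metrics port name on Strimzi pods
```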

Secure your Kafka cluster

Security is a first-class citizen in Strimzi deployments. You can enforce best practices easily:

- Enable mutual TLS for internal communication

- Use TLS or SCRAM for client authentication

- Define RBAC via KafkaUser and ACLs

- Integrate with enterprise SSO using OAuth2 and Keycloak

These configurations ensure compliance with security standards in regulated environments.
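
To make the list above more concrete, the fragment below sketches how authentication and authorization might be switched on in the Kafka resource, with one listener for mutual TLS and one for SCRAM. Listener names and ports are assumptions for this example. An OAuth2 listener is omitted because it depends on the identity provider setup.

```yaml
# Fragment of a Kafka custom resource (illustrative values only)
spec:
  kafka:
    authorization:
      type: simple                # ACLs come from KafkaUser resources
    listeners:
      - name: tls
        port: 9093
        type: internal
        tls: true
        authentication:
          type: tls               # mutual TLS: clients present a certificate
      - name: scram
        port: 9094
        type: internal
        tls: true
        authentication:
          type: scram-sha-512     # username and password over an encrypted connection
```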

Day-2 operations with Strimzi

After deploying Kafka, ongoing operations are just as important.

Rolling upgrades

Strimzi supports version upgrades with zero downtime by modifying the Kafka spec:

    spec:
      kafka:
        version: 3.6.0

The operator will orchestrate rolling restarts, preserving broker states and data.

Autoscaling and rebalancing

For dynamic workloads, you can add brokers and rebalance partitions using Cruise Control, which is supported by Strimzi as an add-on component.
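
As a sketch of that workflow, Cruise Control is enabled in the Kafka resource and a rebalance is then requested through a KafkaRebalance resource. The names and the broker ID below are hypothetical. The example assumes one broker with ID 3 was just added to a cluster called `my-cluster`.

```yaml
# Fragment of the Kafka custom resource: deploy Cruise Control with defaults
spec:
  cruiseControl: {}
---
# Request a rebalance that moves partition replicas onto the new broker
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaRebalance
metadata:
  name: add-broker-rebalance        # hypothetical name
  namespace: kafka
  labels:
    strimzi.io/cluster: my-cluster  # must match the name of the Kafka resource
spec:
  mode: add-brokers
  brokers: [3]                      # assumed ID of the newly added broker
```

The operator first generates an optimization proposal. Once reviewed, it can be approved with `kubectl annotate kafkarebalance add-broker-rebalance strimzi.io/rebalance=approve -n kafka`.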

Kafka disaster recovery and backup

Use Kafka Connect S3 Sink connectors to export topic data, or leverage persistent volume snapshots combined with offset backups.
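
A topic export of this kind could be declared as a KafkaConnector resource. The sketch below assumes the Confluent S3 sink connector plugin is present in the Connect image and that AWS credentials are supplied to the Connect pods separately. The bucket, region, topic, and resource names are hypothetical.

```yaml
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaConnector
metadata:
  name: orders-s3-backup              # hypothetical name
  namespace: kafka
  labels:
    strimzi.io/cluster: my-connect    # name of the KafkaConnect resource
spec:
  class: io.confluent.connect.s3.S3SinkConnector   # assumes this plugin is installed
  tasksMax: 2
  config:
    topics: orders                    # hypothetical topic to export
    s3.bucket.name: example-kafka-backup
    s3.region: eu-central-1
    storage.class: io.confluent.connect.s3.storage.S3Storage
    format.class: io.confluent.connect.s3.format.json.JsonFormat
    flush.size: 1000                  # records written per S3 object
```

Note that a sink export captures message data only. Consumer offsets and topic configuration need to be backed up separately.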

Final thoughts

Kafka on Kubernetes is no longer experimental—with the right tools like Strimzi, it’s a stable, scalable, secure option for modern data platforms. Organizations benefit from declarative infrastructure, observability integration, and improved DevOps velocity. For enterprises in regulated industries, adding SLA-backed support like Inteca’s ensures peace of mind.

Whether you’re running Kafka on OpenShift or upstream Kubernetes, this operator-driven approach bridges the gap between developer agility and platform stability.

Why choose Inteca to deploy and manage Kafka on Kubernetes?

Inteca is a European-based Kafka and OpenShift expert with deep specialization in Kubernetes-native, GitOps-aligned deployments of Apache Kafka. Our platform-first approach is trusted by banks, telecoms, and public sector organizations that need enterprise resilience without hyperscaler lock-in.

With Inteca, you get:

- Fully managed Kafka deployments using Strimzi or Red Hat Streams for Apache Kafka

- 24/7 SLA-backed support with senior engineers (Bronze to Platinum tiers)

- Early Life Support (30-day onboarding) with proactive tuning and monitoring

- Built-in security (TLS, OAuth2, KafkaUser RBAC) and observability (Prometheus, Grafana)

- OpenShift-native integration and GitOps workflows using Helm and CRDs

- Transparent pricing—no surprise costs for throughput or storage

If you’re ready to operationalize Kafka with production-grade reliability, Inteca is the partner to trust.
